Ensemble Classifiers Cores

Ensemble learning requires creating a set of individually trained classifiers, typically decision trees (DTs) or neural networks, whose predictions are combined when classifying previously unseen instances. Although simple, this idea has proved effective, producing systems that are more accurate than a single classifier.

All ensemble systems consist of two key components.

The first component calculates the classification of the current instance for every ensemble member.

The second component then combines the classifications of the individual classifiers that make up the ensemble into a single classification, in such a way that correct decisions are amplified and incorrect ones are canceled out.

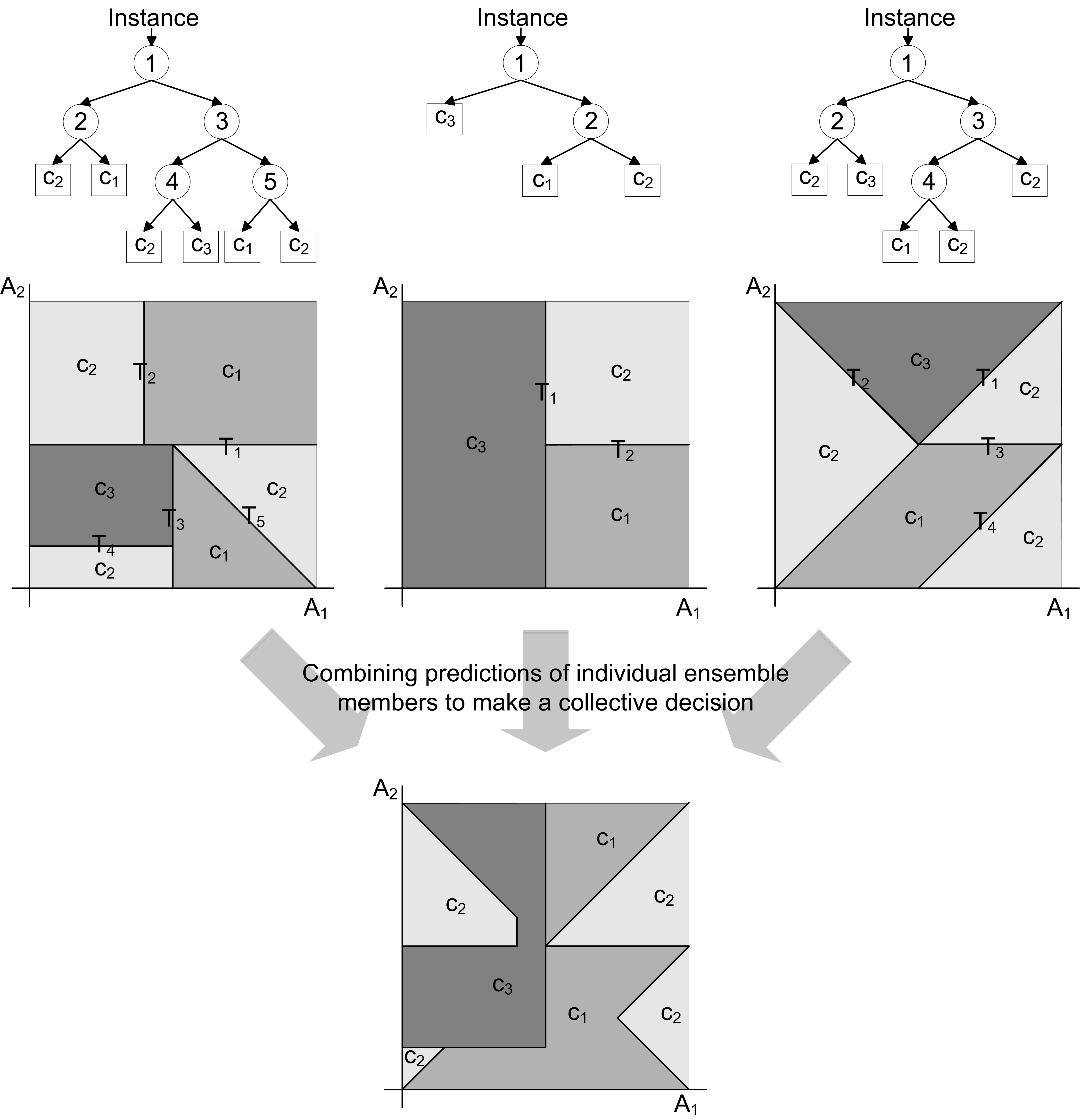

This concept is illustrated in the Figure below.

Basic structure of a decision-tree-based ensemble classifier, with the possible classification regions for a two-attribute classification problem.

The figure above shows a three-member decision tree ensemble classifier. Each ensemble member classifies the incoming instance. These classifications are then combined, for example by majority voting, to produce the combined classification decision.
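The two components described above can be sketched in a few lines of Python. This is only an illustrative software model, not a description of the IP cores: the three trees, their thresholds, the class labels `"A"` and `"B"`, and the function names `evaluate_members`, `majority_vote`, and `classify` are all hypothetical.

```python
from collections import Counter

# Three hypothetical decision trees over two attributes, x[0] and x[1].
# The thresholds are made up purely for illustration.
def tree_a(x):
    return "A" if x[0] < 0.5 else "B"

def tree_b(x):
    return "A" if x[1] < 0.4 else "B"

def tree_c(x):
    if x[0] < 0.6:
        return "A" if x[1] < 0.7 else "B"
    return "B"

ENSEMBLE = [tree_a, tree_b, tree_c]

def evaluate_members(members, x):
    """First component: one classification per ensemble member."""
    return [member(x) for member in members]

def majority_vote(votes):
    """Second component: return the most frequent class label.

    With an odd number of members and two classes, no tie can occur.
    """
    return Counter(votes).most_common(1)[0][0]

def classify(members, x):
    """Evaluate every member, then combine the votes."""
    return majority_vote(evaluate_members(members, x))
```

For the instance `(0.55, 0.3)`, `tree_a` votes `"B"` while `tree_b` and `tree_c` vote `"A"`, so the combined decision is `"A"`: the single dissenting vote is outvoted.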

The main advantages of ensemble classifiers over single classifiers are higher accuracy and greater robustness. The price is a large amount of memory to store the ensemble and high computing power to evaluate it. Ensemble classifiers typically combine 30 or more individual classifiers, so matching the classification speed of a single classifier requires 30 or more times the memory and computational power.

Ensemble classifiers are typically implemented in software. However, in applications that require rapid classification or ensemble creation, a hardware implementation is the only solution.

So-Logic is one of the first companies to offer IP cores that enable implementation of ensemble classifiers directly in hardware. Our portfolio of ensemble learning IP cores includes:

- Ensemble Evaluation Cores - IP cores that can be used to calculate the predictions of individual members of the ensemble

- Combination Rules Cores - IP cores that are used to combine the predictions of individual members in order to make a collective decision

- Ensemble Inference Cores - IP cores that are able to create ensemble classifiers from supplied training data
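To make the idea of creating an ensemble from training data concrete, here is a minimal software sketch using bagging (bootstrap aggregating) with one-level decision trees. Bagging is an assumption chosen for illustration; the text above does not say which algorithm the cores implement. The names `train_stump`, `build_ensemble`, and `predict` are hypothetical.

```python
import random
from collections import Counter

def train_stump(data):
    """Fit a one-level decision tree (stump) with the fewest training errors.

    data is a list of (features, label) pairs.
    """
    labels = sorted({y for _, y in data})
    best = None
    for f in range(len(data[0][0])):                 # candidate attribute
        for thr in sorted({x[f] for x, _ in data}):  # candidate threshold
            for lo in labels:                        # label if x[f] <= thr
                for hi in labels:                    # label if x[f] > thr
                    errors = sum(
                        (lo if x[f] <= thr else hi) != y for x, y in data
                    )
                    if best is None or errors < best[0]:
                        best = (errors, f, thr, lo, hi)
    _, f, thr, lo, hi = best
    return lambda x: lo if x[f] <= thr else hi

def build_ensemble(data, n_members, seed=0):
    """Train each member on a bootstrap sample drawn with replacement."""
    rng = random.Random(seed)
    members = []
    for _ in range(n_members):
        sample = [rng.choice(data) for _ in data]
        members.append(train_stump(sample))
    return members

def predict(members, x):
    """Combine the members' predictions by majority vote."""
    return Counter(member(x) for member in members).most_common(1)[0][0]

# Toy two-attribute training set: the class depends only on the first attribute.
TRAINING_DATA = [
    ((0.1, 0.5), "A"), ((0.2, 0.1), "A"), ((0.3, 0.9), "A"), ((0.4, 0.3), "A"),
    ((0.6, 0.7), "B"), ((0.7, 0.2), "B"), ((0.8, 0.8), "B"), ((0.9, 0.4), "B"),
]
ensemble = build_ensemble(TRAINING_DATA, n_members=15)
```

Each member sees a slightly different resampling of the training data, so the members differ in where they place their thresholds, and the vote averages out those differences.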